The 1% Error That Ruins Everything

Why AI agents are mathematically doomed - and what to build instead

In 2025, 42% of companies abandoned most of their AI initiatives - up from just 17% the year before. The culprit wasn’t bad implementation or insufficient data. It was math: an AI agent that is 99% accurate at each step, performing 50 sequential steps, completes the whole task correctly only about 60% of the time, and most enterprise workflows require far more than 50 decisions. The industry has been optimizing the wrong variable.
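The arithmetic behind that figure is the product rule: if each step succeeds with probability p and every step must succeed, the chain as a whole succeeds with probability p^n. Here is a minimal sketch. It assumes the steps fail independently, and the function name `chain_success` is purely illustrative.

```python
def chain_success(p: float, n: int) -> float:
    """Probability that n independent steps, each succeeding with probability p, all succeed.

    Assumes step failures are independent; correlated failures are not modeled.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    return p ** n


# End-to-end success for a 99%-per-step agent as the workflow gets longer.
for steps in (10, 50, 100, 500):
    print(f"{steps:>4} steps at 99% per step: {chain_success(0.99, steps):.1%}")
```

At 50 steps this comes out to about 0.605. Per-step accuracy never changes across the loop, yet the end-to-end rate keeps falling as the step count grows.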

Executives didn’t cancel those programs because the UX was clunky, or because there were too many em-dashes. They canceled them because the systems quietly failed in production, over and over, in ways no one could predict or fix. When it came time to show return on investment, there was nothing to show.

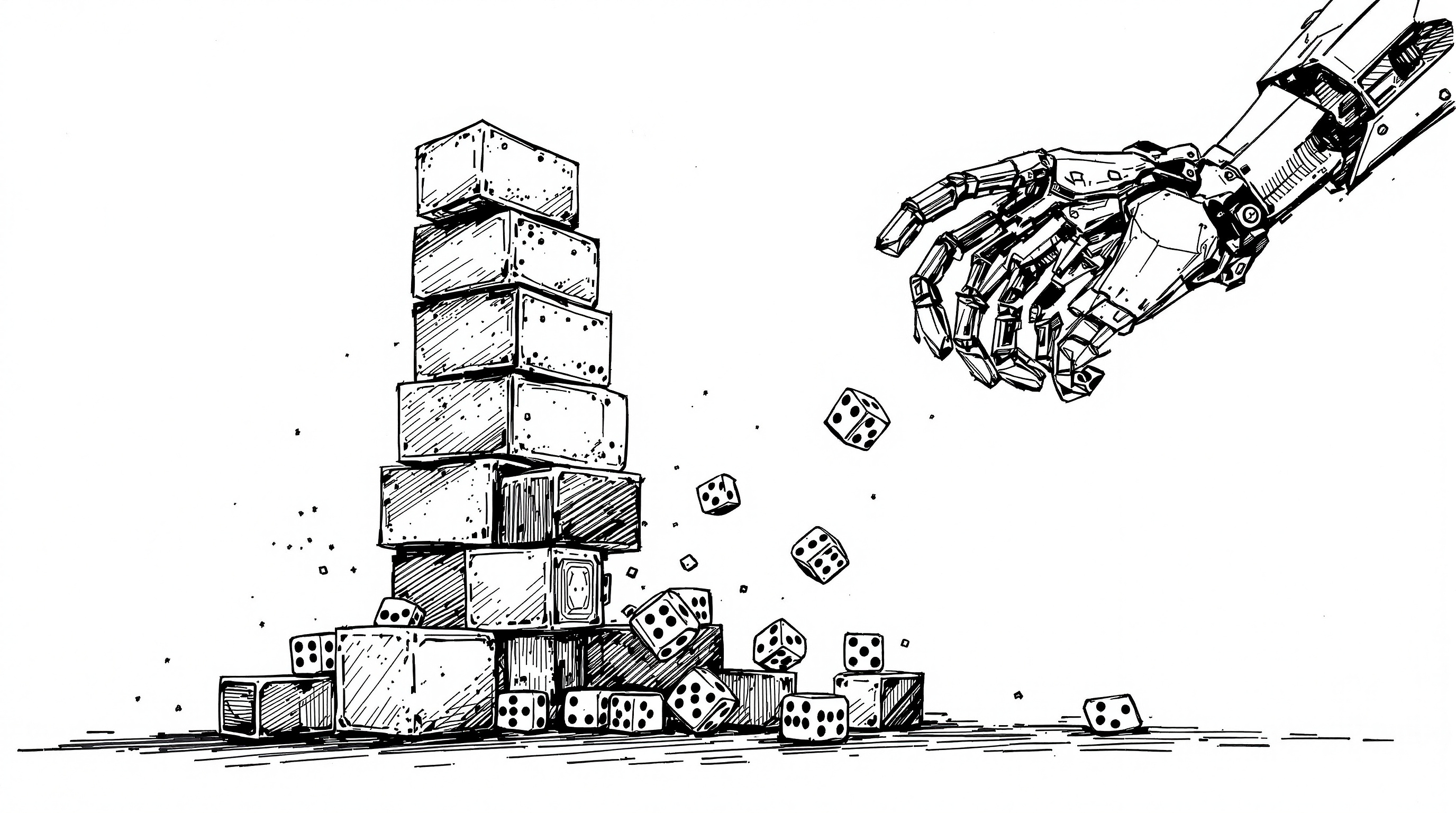

This isn’t a talent problem or a tooling problem. It’s a category error. We’ve been treating probabilistic systems as deterministic infrastructure, expecting software behavior from something that is, at its core, an extremely sophisticated dice roll.

Software either works or it has a bug. Models are different. They’re never fully right and never fully wrong. They are distributions. And that changes everything.

FERZ’s reliability analysis quantified what many engineering teams have learned the hard way. Take five agents chained in sequence, each “pretty good” at 85% accuracy, or one model asked to make five sequential decisions. The system-level reliability isn’t 85%… it collapses to 44%. At ten agents, it drops below 20%.
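These figures follow from the same product rule (0.85^5 ≈ 0.44 and 0.85^10 ≈ 0.20). To show that the collapse is not an artifact of the formula, here is a small Monte Carlo sketch. It simulates a pipeline of hypothetical agents, each modeled as an independent trial that succeeds 85% of the time, and counts a run as successful only if every agent succeeds. Independence is an assumption, and this is an illustration, not FERZ’s methodology.

```python
import random


def simulate_pipeline(per_agent_accuracy: float, num_agents: int,
                      trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the end-to-end success rate of a chain of independent agents by simulation."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    successes = 0
    for _ in range(trials):
        # A run succeeds only if every agent in the chain gets its step right.
        if all(rng.random() < per_agent_accuracy for _ in range(num_agents)):
            successes += 1
    return successes / trials


# Compare the simulated rate with the exact product p ** n.
for agents in (1, 5, 10):
    estimate = simulate_pipeline(0.85, agents)
    exact = 0.85 ** agents
    print(f"{agents:>2} agents: simulated {estimate:.3f}, exact {exact:.3f}")
```

With this many trials the simulated rates should land within about a percentage point of the exact product. The fixed seed keeps the output the same from run to run.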

The situation gets worse as you push models toward autonomy. Every step in a multi-hop plan adds another chance to drift. Sentrix Labs documented a customer service agent that grew 2.7% more verbose every week, doubling its response length in six months without anyone noticing. Drift is not a bug. It’s gravity: a slow, constant pull on any stochastic system left running unobserved.
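The doubling claim is compound growth. A response length that grows 2.7% per week is multiplied by 1.027 each week, so it doubles after ln 2 / ln 1.027 weeks, which is about 26 weeks or roughly six months. Here is a short sketch of that calculation. The 2.7% rate is the figure quoted above, and the function name is illustrative.

```python
import math


def doubling_time(rate_per_period: float) -> float:
    """Number of periods for a quantity growing at a fixed compound rate to double."""
    if rate_per_period <= 0:
        raise ValueError("growth rate must be positive")
    # log1p(r) computes ln(1 + r) accurately for small r.
    return math.log(2) / math.log1p(rate_per_period)


weeks = doubling_time(0.027)
print(f"Doubles after about {weeks:.1f} weeks")
print(f"Growth factor after 26 weeks: {1.027 ** 26:.2f}")
```

Each week’s change is under 3%, which is easy to miss in any single review, yet over half a year it compounds into a doubling.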

And hallucination isn’t going away. Researchers have shown that hallucination rates hit a floor that doesn’t vanish with more parameters; hallucination is baked into how these models work. Throw in retrieval, tools, and external APIs, and you haven’t removed randomness. You’ve just spread it across more components.

Then leaders take this stack and say, “Let’s replace an entire workflow.” What they get isn’t an agent. It’s an unreliable Rube Goldberg machine with a friendly chat UI.

The industry response has been to double down on control: guardrails, policy engines, deterministic validators, human-in-the-loop review. All necessary. None sufficient to rescue the “autonomous agent” dream.

Look closely at the rare “successful” production agents Sentrix and others showcase. Under the hood, you find three things every time: heavy deterministic scaffolding, hard-coded guardrails, and humans quietly cleaning up the mess.

That isn’t deploying sophisticated, high-tech AI agents… it’s rebuilding traditional software around an unpredictable component and calling the whole thing AI-native.

And you pay for it in senior engineers writing harnesses instead of features, in domain experts reviewing outputs more carefully than they would review a junior colleague’s work, and in incident response when the agent does something “no one has ever seen before” that was always mathematically possible.

This is the new AI tax. These systems often need oversight from people more experienced than the ones you were planning to replace. Autonomy was supposed to cut headcount. In practice, it drags your most expensive people deeper into the loop.

“But…! Models will get better! We’re just early. You don’t want to get left behind, do you?”

Sure… capability will improve. But reliability will not converge to software-like behavior. Even at 99.9% per-step accuracy, a 1,000-step workflow has only a 37% chance of succeeding end-to-end. Most serious business processes span thousands of micro-decisions across systems, contexts, and time, all while software keeps getting more complex.
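Why 37%? For a per-step error rate ε, a chain of n steps succeeds with probability (1 − ε)^n. For small ε, ln(1 − ε) ≈ −ε, so this is well approximated by e^(−εn). With ε = 0.001 and n = 1,000 the exponent is −1, and the success rate is about 1/e:

```latex
$$
(1-\varepsilon)^n = e^{\,n\ln(1-\varepsilon)} \approx e^{-\varepsilon n},
\qquad
0.999^{1000} \approx 0.3677,
\qquad
e^{-1} \approx 0.3679 .
$$
```

What matters is the product εn. Cutting the error rate tenfold only buys a workflow ten times longer at the same end-to-end reliability.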

The math does not bend to your roadmap.

RAG, fine-tuning, evals, better prompts, better vendors: all of it optimizes parameters inside the same architecture. None of it changes the fact that you’re chaining probabilistic steps and expecting deterministic outcomes.

Just one more roll of the dice.

So the strategic question has to shift. Stop asking, “How do we make agents reliable enough to run this workflow?” Start asking, “Where is AI genuinely good, and where is it a liability?”

Because AI is genuinely good at some things. It’s extraordinary at synthesis, pattern recognition, drafting, exploration, and augmenting human judgment in the moment. It’s terrible at sequential autonomy, consistency over time, and anything where a 1% error rate compounds into chaos.

Knowing the difference is the skill. Having the judgment to deploy AI where it shines - and keep it away from where it doesn’t - is what separates strategy from hype.

Instead of making the agent the star of the show, use AI to help design and build the show. Using an AI coding assistant to analyze your data and write actual software will get you much further than trying to untangle the mess of random agentic output. Testable. Updatable. Predictable. Repeatable. Traditional software that does exactly what you need, built faster with AI’s help.

That’s not as sexy as “autonomous agents.” But it works. It ships. It doesn’t delete your production database.

The winners won’t be organizations with the most agents. They’ll be the ones with the judgment to know when AI is the tool and when it’s the trap.

The 42% knew something the optimists didn’t: you can’t debug probability into certainty.


